5 Ultimate Ways To Create A Naive Dropout In RNNs Today

Introduction

Recurrent Neural Networks (RNNs) are powerful tools for processing sequential data, which makes them suitable for tasks such as natural language processing, speech recognition, and time series analysis. However, training RNNs can be challenging. They suffer from the vanishing and exploding gradient problems, which hinder learning of long-term dependencies, and they overfit easily, especially on small datasets. Dropout addresses the second problem: it is a regularization technique that reduces overfitting. It does not fix gradient problems, which are usually handled with gated architectures such as LSTMs and GRUs and with gradient clipping. In this blog post, we will explore five ultimate ways to create a naive dropout in RNNs and improve their generalization.

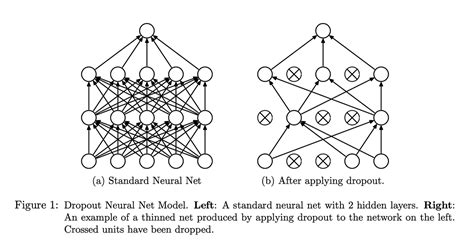

Understanding Dropout

Dropout is a regularization technique widely used in deep learning to prevent overfitting and improve the generalization of neural networks. It works by randomly setting a fraction of units to zero during training, effectively “dropping out” these units and their connections. This reduces the reliance on any specific unit and encourages the network to learn more robust representations. Dropout is switched off at inference time. In the common “inverted” form, the surviving activations are scaled by 1 / (1 - p) during training, where p is the dropout rate, so that the expected activation is the same in both modes.
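As a rough illustration, inverted dropout on a single vector can be sketched in plain Python. The function name `dropout` and its parameters are illustrative choices rather than any library's API, and the vector is a plain list of floats to keep the example dependency-free.

```python
import random

def dropout(values, rate, training=True, rng=random):
    """Inverted dropout on a list of floats (illustrative helper)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # Dropout is a no-op at inference time.
        return list(values)
    keep = 1.0 - rate
    # Keep each unit with probability `keep` and scale survivors by 1/keep
    # so the expected value of every unit is unchanged.
    return [v / keep if rng.random() < keep else 0.0 for v in values]
```

Passing a seeded `random.Random` instance as `rng` makes the masks reproducible, which is handy when debugging.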

Implementing Dropout in RNNs

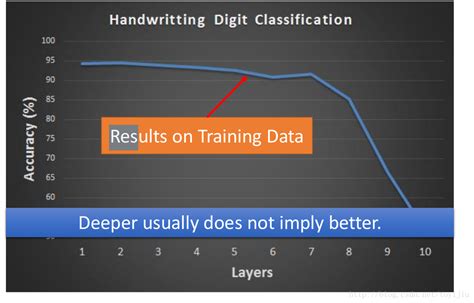

When it comes to RNNs, dropout can be applied at various levels, including the input, hidden, and output layers. By choosing carefully where the masks go, we can reduce overfitting without disrupting the network’s ability to carry information across time steps. Here are five effective ways to create a naive dropout in RNNs:

1. Input Dropout

Input dropout, also known as input-level dropout, involves applying dropout to the input layer of the RNN. This technique randomly sets a portion of the input units to zero before feeding them into the network. By doing so, we introduce stochasticity and prevent the network from relying too heavily on specific input features. Input dropout is particularly useful when dealing with high-dimensional input data, as it helps to reduce the impact of irrelevant or redundant features.
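A minimal sketch of input dropout, assuming the sequence is a list of time steps and each time step is a list of floats. The name `input_dropout` is hypothetical; an independent mask is drawn for every time step, which is the naive behaviour.

```python
import random

def input_dropout(sequence, rate, training=True, rng=random):
    """Apply inverted dropout to every input vector in a sequence.

    `sequence` is a list of time steps, each a list of floats.
    """
    if not training or rate == 0.0:
        return [list(x_t) for x_t in sequence]
    keep = 1.0 - rate
    # A new random mask is drawn for each time step and each feature.
    return [
        [x / keep if rng.random() < keep else 0.0 for x in x_t]
        for x_t in sequence
    ]
```

The result has the same shape as the input and can be fed to the RNN in place of the original sequence.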

2. Recurrent Dropout

Recurrent dropout, or hidden-level dropout, is applied to the hidden state that is passed from one time step to the next. During training, a fraction of the hidden units is randomly set to zero before the recurrent weights are applied. This helps to regularize the hidden representations and prevents the network from memorizing specific patterns in the training data. Recurrent dropout is especially beneficial when the RNN has a large number of hidden units, as it promotes the learning of diverse and robust hidden representations. In the naive form a new mask is sampled at every time step, which can erase information the network is trying to carry forward. A common refinement samples one mask per sequence and reuses it at every time step.
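The naive form can be sketched as follows, assuming a single-layer tanh RNN without biases. The names `rnn_forward`, `matvec`, `w_x`, and `w_h` are hypothetical, and a fresh mask is sampled for the previous hidden state at every time step.

```python
import math
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def rnn_forward(inputs, w_x, w_h, rate, training=True, rng=random):
    """Run a tanh RNN, applying naive dropout to h_{t-1} at each step."""
    h = [0.0] * len(w_h)
    keep = 1.0 - rate
    states = []
    for x_t in inputs:
        if training and rate > 0.0:
            # Naive variant: a new mask is drawn at every time step.
            h_in = [v / keep if rng.random() < keep else 0.0 for v in h]
        else:
            h_in = h
        pre = [a + b for a, b in zip(matvec(w_x, x_t), matvec(w_h, h_in))]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states
```

To reuse one mask for the whole sequence instead, the mask would be sampled once before the loop and applied unchanged at every step.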

3. Zoneout

Zoneout is a variant of dropout specifically designed for RNNs. Unlike traditional dropout, which sets the selected units to zero, zoneout makes the selected hidden units keep their value from the previous time step, while the remaining units are updated as usual. Because a zoned-out unit carries its state forward unchanged, the temporal coherence of the hidden states is preserved while regularization is still introduced. Zoneout has been shown to be effective in improving the performance of RNNs, especially in tasks involving long-term dependencies.
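A minimal sketch of the zoneout update for one time step, assuming `h_prev` is the previous hidden state and `h_new` is the state the RNN cell would normally produce. The function name and argument names are illustrative. At inference time the sketch uses the expected value of the random update, which is the usual convention for zoneout.

```python
import random

def zoneout(h_prev, h_new, rate, training=True, rng=random):
    """Mix previous and candidate hidden states unit by unit."""
    if training:
        # With probability `rate`, a unit keeps its previous value;
        # otherwise it takes the freshly computed value.
        return [p if rng.random() < rate else n for p, n in zip(h_prev, h_new)]
    # Inference: deterministic expectation of the training-time update.
    return [rate * p + (1.0 - rate) * n for p, n in zip(h_prev, h_new)]
```

Note that no unit is ever set to zero here, which is the key difference from ordinary dropout.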

4. Layer-wise Dropout

Layer-wise dropout is a technique that applies dropout independently to each layer of the RNN. This approach allows for different dropout rates to be applied to different layers, providing more fine-grained control over regularization. By assigning higher dropout rates to specific layers, we can encourage the network to learn more robust representations at those layers. Layer-wise dropout can be particularly useful when dealing with complex RNN architectures or when certain layers are more prone to overfitting.
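One way to sketch layer-wise dropout is to pair every layer of a stack with its own rate. In the example below, `layers` is a list of hypothetical callables standing in for RNN layers (each maps a list of vectors to a list of vectors), and `rates[i]` is the dropout rate applied to the outputs of `layers[i]`. All names are illustrative.

```python
import random

def dropout(values, rate, training, rng):
    """Inverted dropout on a list of floats."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

def stacked_forward(sequence, layers, rates, training=True, rng=random):
    """Pass a sequence through stacked layers, each with its own dropout rate."""
    if len(layers) != len(rates):
        raise ValueError("need exactly one dropout rate per layer")
    out = sequence
    for layer, rate in zip(layers, rates):
        out = layer(out)
        # Apply this layer's own rate to each of its output vectors.
        out = [dropout(h_t, rate, training, rng) for h_t in out]
    return out
```

For example, `rates=[0.1, 0.3]` would regularize the second layer more heavily than the first.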

5. Scheduled Dropout

Scheduled dropout, also known as time-dependent dropout, involves dynamically adjusting the dropout rate during training. Instead of using a fixed dropout rate throughout the training process, we change the rate over time according to a schedule. A common choice is to start with a low rate and raise it gradually, which lets the network focus on learning the underlying patterns during the initial stages of training and introduces more regularization as training progresses. Scheduled dropout can help strike a balance between learning and regularization, improving the network’s performance and generalization.
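A simple schedule can be sketched as a linear ramp between two rates. The function name `scheduled_rate` and the default values of `start_rate` and `end_rate` are assumptions made for illustration, not recommended settings.

```python
def scheduled_rate(step, total_steps, start_rate=0.0, end_rate=0.5):
    """Linearly interpolate the dropout rate over training."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    # Clamp progress to [0, 1] so the rate stays within the two endpoints.
    progress = min(max(step / total_steps, 0.0), 1.0)
    return start_rate + (end_rate - start_rate) * progress
```

In a training loop, the returned value would be computed once per step or epoch and passed to whichever dropout function is in use. Swapping `start_rate` and `end_rate` gives a decreasing schedule instead.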

Choosing the Right Dropout Strategy

When implementing dropout in RNNs, it is essential to consider the characteristics of the task and the dataset. Different dropout strategies may work better for different scenarios. Here are some guidelines to help you choose the appropriate dropout strategy:

- Input Dropout: Consider input dropout when dealing with high-dimensional input data or when you want to reduce the impact of irrelevant features. It is particularly useful for tasks such as natural language processing, where the input sequence can be long and sparse.

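- Recurrent Dropout: Recurrent dropout is suitable when the recurrent connections themselves overfit, as often happens in language modeling or machine translation with limited data. It helps to regularize the hidden representations and prevent the network from overfitting to specific patterns. Because naive per-step masks can disturb the information carried across time steps, keep the rate modest on tasks with long-term dependencies.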

- Zoneout: Zoneout is a powerful technique for tasks involving long-term dependencies, especially when the network needs to maintain temporal coherence. It has been reported to help on sequence benchmarks such as character-level and word-level language modeling.

- Layer-wise Dropout: Layer-wise dropout provides flexibility in regularizing specific layers of the RNN. It is beneficial when you want to control the dropout rate independently for each layer, allowing for a more tailored regularization strategy.

- Scheduled Dropout: Scheduled dropout can be advantageous when dealing with complex tasks or datasets. By gradually adjusting the dropout rate, you can balance learning and regularization, leading to improved performance and generalization.

Tips for Effective Dropout Usage

To make the most of dropout in RNNs, consider the following tips:

- Start with a Low Dropout Rate: Begin with a relatively low dropout rate, such as 0.1 or 0.2, and gradually increase it if needed. Starting with a high dropout rate may hinder the network’s learning process, especially during the initial stages of training.

- Experiment with Different Dropout Rates: Try experimenting with various dropout rates for different layers or time steps. Fine-tuning the dropout rates can lead to better performance and generalization.

- Monitor Validation Performance: Regularly evaluate the performance of your RNN on a validation set to ensure that dropout is not hurting the network’s ability to generalize. Adjust the dropout rates or strategies if necessary.

- Combine Dropout with Other Regularization Techniques: Dropout can be effectively combined with other regularization techniques, such as L1 or L2 regularization, to further improve the network’s performance and prevent overfitting.
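The tips on trying several rates and monitoring validation performance can be combined into a small selection routine. In this sketch, `validation_loss` is a hypothetical callable that trains a model with the given dropout rate and returns its loss on the validation set. It is a placeholder you would supply, not part of any library.

```python
def pick_dropout_rate(candidate_rates, validation_loss):
    """Return the rate with the lowest validation loss, plus all scores."""
    scores = {rate: validation_loss(rate) for rate in candidate_rates}
    best = min(scores, key=scores.get)
    return best, scores
```

Starting the candidate list with low values such as 0.1 and 0.2 keeps the search in line with the first tip above.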

Conclusion

In this blog post, we explored five ultimate ways to create a naive dropout in RNNs. By applying dropout at different levels, such as the input, hidden, and output layers, we can reduce overfitting and improve the network’s generalization capabilities. Input dropout, recurrent dropout, zoneout, layer-wise dropout, and scheduled dropout are powerful techniques that offer flexibility and control over regularization. When choosing a dropout strategy, consider the characteristics of your task and dataset, and experiment with different configurations to find the optimal setup. With proper implementation and fine-tuning, dropout can significantly improve the performance of your RNN models on unseen data.

FAQ

What is the purpose of dropout in RNNs?

Dropout is a regularization technique used in RNNs to prevent overfitting and improve generalization. It helps the network learn more robust representations by randomly dropping out input units or hidden units during training.

How does input dropout work in RNNs?

Input dropout applies dropout to the input layer of the RNN. It randomly sets a portion of the input units to zero before feeding them into the network, reducing the impact of irrelevant features and introducing stochasticity.

What is the benefit of using recurrent dropout in RNNs?

Recurrent dropout applies dropout to the hidden state passed between time steps. It helps regularize the hidden representations, preventing the network from memorizing specific patterns in the training data and improving generalization.

How does zoneout differ from traditional dropout in RNNs?

Zoneout is a variant of dropout specifically designed for RNNs. Instead of setting dropped units to zero, zoneout keeps randomly chosen hidden units at their value from the previous time step, maintaining temporal coherence while introducing regularization.

When should I use layer-wise dropout in RNNs?

Layer-wise dropout is useful when you want to apply different dropout rates to specific layers of the RNN. It provides flexibility in regularizing different layers independently, allowing for a more tailored regularization strategy.